The libvirt driver in the OpenStack Compute service (nova) has supported

instance NUMA topologies for a number of releases. A NUMA topology can be added

to an instance either explicitly, using the hw:numa_nodes=N flavor extra

spec, or implicitly, by requesting a specific mempage size

(hw:mem_page_size=N) or CPU pinning (hw:cpu_policy=dedicated). For

historical reasons, it is not possible to request memory pages or CPU pinning

without getting a NUMA topology, meaning every pinned instance or instance with

hugepages (common when using something like Open vSwitch with DPDK) has a NUMA

topology associated with it.

For yet more historical reasons, nova has gained a number of configuration

options that apply only to instances with a NUMA topology or only to those

without one. This article aims to discuss the implications of one of these,

vcpu_pin_set, through a number of relevant examples.

Overview of vcpu_pin_set

The vcpu_pin_set option has existed in nova for quite some time and describes

itself as:

Defines which physical CPUs (pCPUs) can be used by instance virtual CPUs (vCPUs).

Possible values:

A comma-separated list of physical CPU numbers that virtual CPUs can be allocated to by default. Each element should be either a single CPU number, a range of CPU numbers, or a caret followed by a CPU number to be excluded from a previous range. For example:

vcpu_pin_set = "4-12,^8,15"
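To make the syntax concrete, here is a minimal sketch of how such a value could be expanded into a set of host CPU numbers. The function name `parse_cpu_spec` and its error handling are assumptions made for illustration; this is not nova's own parser.

```python
from __future__ import annotations


def parse_cpu_spec(spec: str) -> set[int]:
    """Expand a CPU spec such as "4-12,^8,15" into a set of CPU numbers.

    Illustrative only: a hypothetical helper, not nova's implementation.
    """
    included: set[int] = set()
    excluded: set[int] = set()
    for element in spec.split(","):
        element = element.strip()
        if not element:
            continue
        if element.startswith("^"):
            # A caret excludes a single CPU from the included ranges.
            excluded.add(int(element[1:]))
        elif "-" in element:
            start, end = (int(part) for part in element.split("-", 1))
            if start > end:
                raise ValueError(f"invalid range: {element!r}")
            included.update(range(start, end + 1))
        else:
            included.add(int(element))
    return included - excluded


cpus = parse_cpu_spec("4-12,^8,15")
print(sorted(cpus))  # [4, 5, 6, 7, 9, 10, 11, 12, 15]
print(len(cpus))     # 9
```

Exclusions are collected separately and subtracted at the end, so the result does not depend on where the caret element appears in the list.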

This config option has two purposes. Firstly, the placement service uses it to

generate the amount of VCPU resources available on a given host using the

following formula:

(SUM(CONF.vcpu_pin_set) * CONF.cpu_allocation_ratio) - CONF.reserved_host_cpus

(where SUM is the sum of CPUs expressed by the CPU mask).

How we do this can be seen at [1], [2], [3].
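To make the arithmetic concrete, the following minimal sketch applies the formula exactly as written above. The function name and parameters are hypothetical; it mirrors the formula as stated in this article rather than reproducing nova's or placement's code. The inputs are the values reported for the example host later in this article (an allocation ratio of 16.0 and nothing reserved).

```python
def vcpu_capacity(pinned_cpu_count: int,
                  allocation_ratio: float,
                  reserved_host_cpus: int = 0) -> float:
    """Apply the formula from the text: (count * ratio) - reserved.

    Hypothetical helper for illustration only.
    """
    return pinned_cpu_count * allocation_ratio - reserved_host_cpus


# All eight host CPUs available (vcpu_pin_set unset).
print(vcpu_capacity(8, 16.0))  # 128.0

# vcpu_pin_set = 4-7 leaves four CPUs.
print(vcpu_capacity(4, 16.0))  # 64.0
```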

The number of VCPU resources impacts instances regardless of whether they

have a NUMA topology or not since potential NUMA/non-NUMA’ness is not

considered at this early stage of scheduling. However, once allocation

candidates have been provided by placement, we see the original purpose of this

option emerge: for instances with a NUMA topology, it is used by

nova-scheduler (specifically by the NUMATopologyFilter) and by

nova-compute (when building instance XML). NUMA instances either map their

entire range of instance cores to a range of host cores (for non-pinned

instances) or each individual instance core to a specific host core (for pinned

instances), and this mapping is calculated by both nova-scheduler’s

NUMATopologyFilter and nova-compute. vcpu_pin_set limits which of these

host cores can be used, which allows you to do things like exclude every

core from a host NUMA node. However, since instances without a NUMA

topology are entirely floating and are not limited to any host NUMA node, this

option is ignored entirely when placing their vCPUs.
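The effect on NUMA instances can be sketched as a set intersection between each host NUMA node's cores and the configured CPU set. The host layout, function names, and data structures below are assumptions chosen to match the two-node, eight-core host used in the examples; they are not nova's internal objects.

```python
from __future__ import annotations

# Hypothetical host layout: two NUMA nodes with four cores each.
HOST_NUMA_NODES = {0: {0, 1, 2, 3}, 1: {4, 5, 6, 7}}


def usable_cores_per_node(host_nodes: dict[int, set[int]],
                          pin_set: set[int] | None) -> dict[int, set[int]]:
    """Restrict each host NUMA node to the cores allowed by the pin set."""
    if pin_set is None:
        # Option unset: every host core remains usable.
        return {node: set(cores) for node, cores in host_nodes.items()}
    return {node: cores & pin_set for node, cores in host_nodes.items()}


def float_within_node(usable: dict[int, set[int]],
                      node: int, vcpus: int) -> dict[int, set[int]]:
    """Unpinned NUMA instance: every vCPU maps to the node's whole usable range."""
    cores = usable[node]
    if not cores:
        raise ValueError(f"host NUMA node {node} has no usable cores")
    return {vcpu: set(cores) for vcpu in range(vcpus)}


usable = usable_cores_per_node(HOST_NUMA_NODES, {4, 5, 6, 7})
print({node: sorted(cores) for node, cores in usable.items()})
# {0: [], 1: [4, 5, 6, 7]}

mapping = float_within_node(usable, node=1, vcpus=2)
print({vcpu: sorted(cores) for vcpu, cores in mapping.items()})
# {0: [4, 5, 6, 7], 1: [4, 5, 6, 7]}
```

With the CPU set limited to cores 4-7, host NUMA node 0 is left with no usable cores, so a NUMA instance can only land on node 1.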

Examples

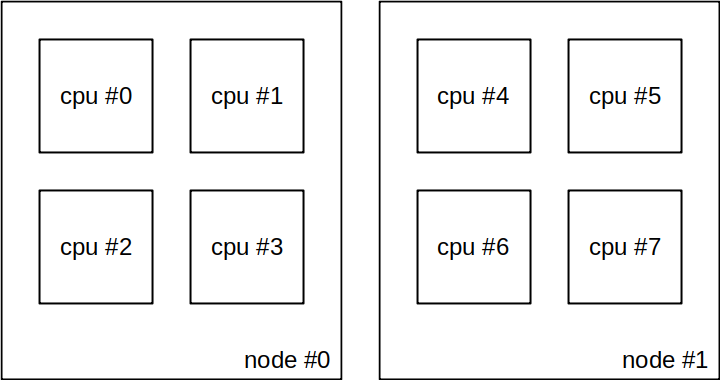

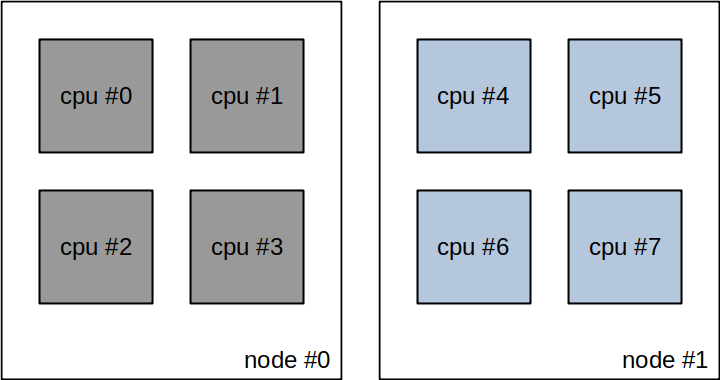

Let’s look at some examples of how this would be reflected in the real world. For all these examples, consider a host with two sockets, each holding one CPU with four cores and no hyperthreading (so eight CPUs in total).

The host NUMA topology with two sockets and four cores per socket

We can see the resources that this reports like so:

$ openstack --os-placement-api-version 1.18 resource provider inventory show \

6a969900-bbf7-4725-959b-2db3092933b0 VCPU

+------------------+-------+
| Field            | Value |
+------------------+-------+
| allocation_ratio | 16.0  |
| max_unit         | 8     |
| reserved         | 0     |
| step_size        | 1     |
| min_unit         | 1     |
| total            | 8     |
+------------------+-------+

Non-NUMA

First, consider an instance without a NUMA topology:

$ openstack flavor create --vcpus 2 --ram 512 --disk 0 test-flavor

$ openstack server create --flavor test-flavor ... test-server

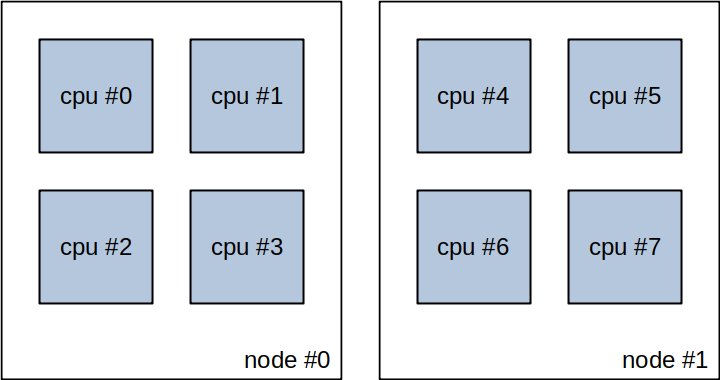

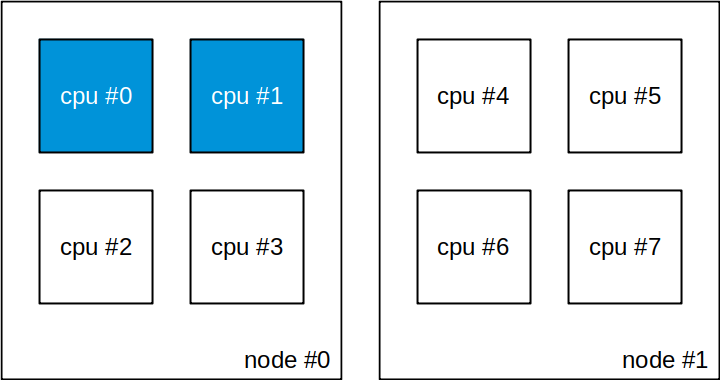

As this instance does not have a NUMA topology, the instance will float across all host cores with no regard for NUMA affinity.

The host NUMA topology is ignored for instances without a NUMA topology.

If we look at what placement is reporting, we can see that our usage has changed accordingly:

$ openstack --os-placement-api-version 1.18 resource provider usage show \

6a969900-bbf7-4725-959b-2db3092933b0

+----------------+-------+
| resource_class | usage |
+----------------+-------+
| VCPU           | 2     |
| MEMORY_MB      | 512   |
| DISK_GB        | 0     |
+----------------+-------+

Now let’s use vcpu_pin_set to exclude the cores from host NUMA node 0 as

seen in the sample nova.conf below, then restart the service:

[DEFAULT]

vcpu_pin_set = 4-7

If we examine the number of resources available in placement, we can see that it has changed:

$ openstack --os-placement-api-version 1.18 resource provider inventory show \

6a969900-bbf7-4725-959b-2db3092933b0 VCPU

+------------------+-------+
| Field            | Value |
+------------------+-------+
| allocation_ratio | 16.0  |
| max_unit         | 4     |
| reserved         | 0     |
| step_size        | 1     |
| min_unit         | 1     |
| total            | 4     |
+------------------+-------+

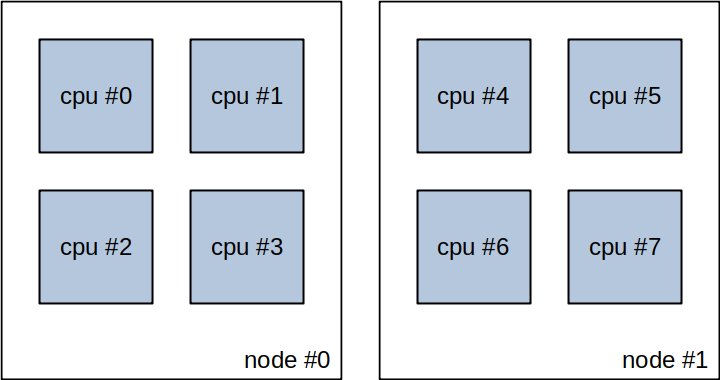

Unfortunately though, because the instance does not have a NUMA topology, this option is completely ignored when actually booting the instance. As above, the instance continues to run across the entire range of host cores.

The vcpu_pin_set option is also ignored for instances without a NUMA

topology.

NUMA, no pinning

Next, consider an instance with a NUMA topology. We can create such an instance like so:

$ openstack flavor create --vcpus 2 --ram 512 --disk 0 test-flavor

$ openstack flavor set --property hw:numa_nodes=1 test-flavor

$ openstack server create --flavor test-flavor ... test-server

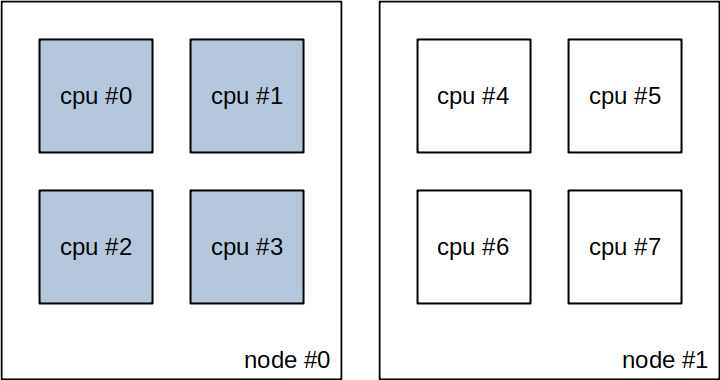

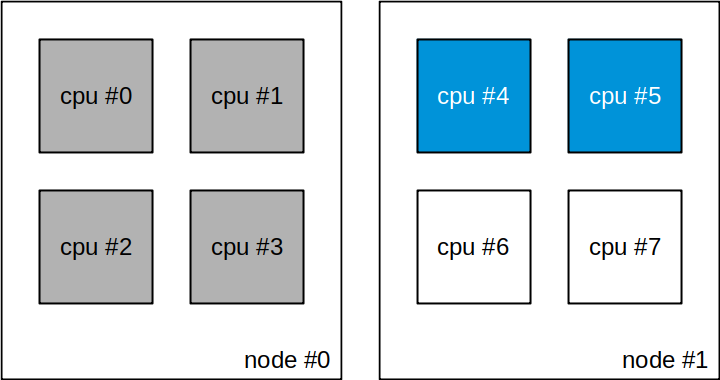

Because this instance has a NUMA topology, the instance will be confined to cores from a single host NUMA node.

The host NUMA topology is considered for instances with a NUMA topology.

Now, once again we’ll use vcpu_pin_set to exclude the cores from host NUMA

node 0 via nova.conf:

[DEFAULT]

vcpu_pin_set = 4-7

And again we’ll see this change in what’s reported to placement:

$ openstack --os-placement-api-version 1.18 resource provider inventory show \

6a969900-bbf7-4725-959b-2db3092933b0 VCPU

+------------------+-------+
| Field            | Value |
+------------------+-------+
| allocation_ratio | 16.0  |
| max_unit         | 4     |
| reserved         | 0     |
| step_size        | 1     |
| min_unit         | 1     |
| total            | 4     |
+------------------+-------+

This time vcpu_pin_set will actually be respected and we’ll see it reflected

in the host cores used by the instance.

The vcpu_pin_set option is respected for instances with a NUMA topology, so

cores 0-3 are excluded.
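One way to check this on a compute host is to inspect the instance's libvirt domain XML and confirm that no vCPU is allowed onto cores 0-3. The sketch below parses a hand-written sample in the shape of libvirt's `<cputune>`/`<vcpupin>` elements. The sample XML is an assumption for illustration, not output captured from a real deployment, and the exact XML nova emits may differ between releases.

```python
import xml.etree.ElementTree as ET

# Hypothetical, hand-written domain XML fragment for a two-vCPU instance.
SAMPLE_DOMAIN_XML = """
<domain type='kvm'>
  <vcpu placement='static'>2</vcpu>
  <cputune>
    <vcpupin vcpu='0' cpuset='4-7'/>
    <vcpupin vcpu='1' cpuset='4-7'/>
  </cputune>
</domain>
"""


def expand_cpuset(cpuset):
    """Expand a simple cpuset string such as "4-7" or "4,6" into a set of ints."""
    cores = set()
    for element in cpuset.split(","):
        if "-" in element:
            start, end = (int(part) for part in element.split("-", 1))
            cores.update(range(start, end + 1))
        else:
            cores.add(int(element))
    return cores


def vcpu_to_host_cores(domain_xml):
    """Map each instance vCPU to the host cores it is allowed to run on."""
    root = ET.fromstring(domain_xml.strip())
    return {
        int(pin.get("vcpu")): expand_cpuset(pin.get("cpuset"))
        for pin in root.findall("./cputune/vcpupin")
    }


excluded = {0, 1, 2, 3}
for vcpu, cores in sorted(vcpu_to_host_cores(SAMPLE_DOMAIN_XML).items()):
    status = "ok" if not cores & excluded else "uses excluded cores"
    print(vcpu, sorted(cores), status)
# 0 [4, 5, 6, 7] ok
# 1 [4, 5, 6, 7] ok
```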

NUMA, with pinning

Finally, let’s consider pinned instances. We can create such an instance like so:

$ openstack flavor create --vcpus 2 --ram 512 --disk 0 test-flavor

$ openstack flavor set --property hw:cpu_policy=dedicated test-flavor

$ openstack server create --flavor test-flavor ... test-server

As noted previously, these have an implicit NUMA topology but whereas every core of an unpinned instance is mapped to the same range of host cores, the cores of pinned instances are mapped to their own individual host cores.

Because they have a NUMA topology, pinned instances also respect

vcpu_pin_set. As always, we can use vcpu_pin_set to exclude the cores from

host NUMA node 0 via nova.conf:

[DEFAULT]

vcpu_pin_set = 4-7

As always, we’ll see this reflected in placement:

$ openstack --os-placement-api-version 1.18 resource provider inventory show \

6a969900-bbf7-4725-959b-2db3092933b0 VCPU

+------------------+-------+
| Field            | Value |
+------------------+-------+
| allocation_ratio | 16.0  |
| max_unit         | 4     |
| reserved         | 0     |
| step_size        | 1     |
| min_unit         | 1     |
| total            | 4     |
+------------------+-------+

And because pinned instances also have a NUMA topology, we’ll also see this reflected in the host cores used by the instance.

The vcpu_pin_set option is also respected for instances with CPU pinning.

However, it’s worth noting here that pinned instances cannot be overcommitted.

Despite the fact that we have an allocation_ratio of 16.0, we can only

schedule as many pinned instance cores as the total value (four here). These cores also can’t be spread across host

NUMA nodes unless you’ve specifically said otherwise (via the

hw:numa_nodes=N flavor extra spec).
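The lack of overcommit for pinned instances can be illustrated with a small tracker that hands each vCPU its own host core, all taken from a single host NUMA node. The class and its first-fit selection are hypothetical simplifications, not nova's scheduling logic; the starting state assumes vcpu_pin_set = 4-7 on the example host.

```python
from __future__ import annotations


class PinnedCoreTracker:
    """Track free host cores per NUMA node for pinned instances (illustrative)."""

    def __init__(self, usable_nodes: dict[int, set[int]]):
        self.free = {node: set(cores) for node, cores in usable_nodes.items()}

    def pin(self, vcpus: int) -> dict[int, int]:
        """Pin each vCPU to its own free core, all from one host NUMA node."""
        for node in sorted(self.free):
            cores = self.free[node]
            if len(cores) >= vcpus:
                chosen = sorted(cores)[:vcpus]
                # A pinned core is consumed outright: no overcommit.
                cores.difference_update(chosen)
                return dict(enumerate(chosen))
        raise RuntimeError(f"no host NUMA node has {vcpus} free cores")


# Node 0 has no usable cores; node 1 offers cores 4-7.
tracker = PinnedCoreTracker({0: set(), 1: {4, 5, 6, 7}})
print(tracker.pin(2))  # {0: 4, 1: 5}
print(tracker.pin(2))  # {0: 6, 1: 7}
try:
    tracker.pin(2)
except RuntimeError as exc:
    print(exc)  # no host NUMA node has 2 free cores
```

Two two-vCPU pinned instances exhaust the four available cores, so a third request fails even though the allocation ratio is 16.0.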

Summary

The vcpu_pin_set option is used to generate the amount of VCPU resources

available in placement but it otherwise has no effect on instances without a

NUMA topology. For instances with a NUMA topology, it also controls the host

cores that the instance can run on.